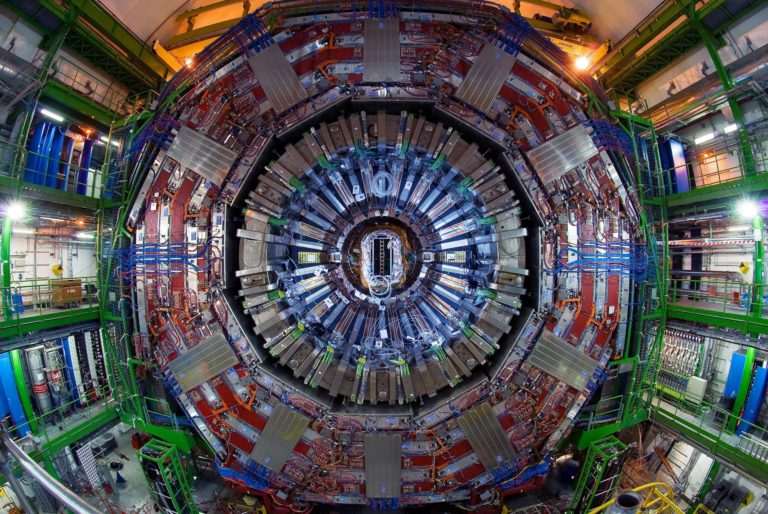

The world’s most important scientific facilities, from the CERN Large Hadron Collider to the National Radio Astronomy Observatory, deal every day with massive amounts of data that must be mined, stored, analyzed and visualized. It’s a colossal task, and handling it requires help from the top minds in data management.

The CERN Large Hadron Collider in Switzerland is one of many major scientific facilities and research projects that will get help from members of the University of Utah’s School of Computing and five other universities on how best to manage their scientific data.

So the National Science Foundation (NSF) is turning to expert computer scientists from the University of Utah’s School of Computing and five other top universities to help these facilities and other research projects manage their data in faster and more affordable ways.

Members of the U’s School of Computing are part of the new CI Compass, an NSF Center of Excellence dedicated to helping these research facilities cope with their “data lifecycle” more effectively.

“The NSF has invested hundreds of millions of dollars in large facilities, such as massive telescopes and oceanographic observatories. The problem is that each have become a technological island, and it’s difficult for them to complete their scientific mission and get up to speed in their data needs,” says U School of Computing professor Valerio Pascucci, who is director of the U’s Center for Extreme Data Management Analysis and Visualization and co-lead on the CI Compass project. “They don’t have sufficient internal expertise. So we work with each of them to advise them on the latest solutions and modernize their software infrastructure, to do things faster or cheaper, and to make sure they don’t become stale and outdated.”

Joining the U in this new center are researchers from Indiana University, Texas Tech University, the University of North Carolina at Chapel Hill, the University of Notre Dame, and the University of Southern California. In addition to Pascucci (pictured), the U team also includes School of Computing research associate professor Robert Ricci, and researchers Giorgio Scorzelli and Steve Petruzza.

The team will help as many as 25 of the NSF’s major facilities and research projects, including the IceCube Neutrino Observatory at the South Pole, the National Superconducting Cyclotron Laboratory in Michigan, the Ocean Observatories Initiative, and the Laser Interferometer Gravitational-Wave Observatory at the California Institute of Technology.

Each of these facilities and projects deals with terabytes and even petabytes (one petabyte is a million gigabytes) of data that goes through a “data lifecycle” of being mined, analyzed, stored and distributed to the public, Pascucci says. Not only will CI Compass help them upgrade to the latest hardware and software for managing that data, but the center’s team will also train facility and project researchers “to empower them to do things on their own,” he says. “Our goal is to make sure they don’t depend on us long term.”

The center will also help ensure that the facilities are not duplicating one another’s work on the same problems, are sharing information more easily, and are not adopting incompatible technologies.

The idea for CI Compass began three years ago with a pilot project that worked with five NSF-funded facilities. The goal was to identify how the center could serve as a forum for exchanging cyberinfrastructure knowledge across fields and facilities, establish best practices, provide expertise, and address technical workforce development and sustainability.

During the pilot, the NSF discovered that the facilities differ in the types of data captured, the scientific instruments used, the data processing and analyses conducted, and their policies and methods for data sharing and use. However, the study also found that they share commonalities in their data lifecycles. The results of the three-year evaluation led to the creation of CI Compass, whose mission is to produce improved cyberinfrastructure for each facility.

CI Compass is funded by an $8 million, five-year NSF grant and will work in conjunction with another NSF center, the Center for Trustworthy Cyberinfrastructure, which will advise these facilities on cybersecurity issues.